How to Get New Site Pages Indexed as Quickly as Possible

7 min. read

Sam Wixted

Content Writer

Sam has been writing for WebFX since 2016 and focuses on UX, crafting amazing website experiences, and digital marketing. In her free time, she likes to spend time on the beach, play with her cats, and go fishing with her husband.

Adding new pages can bring new traffic and visitors to your site, and they’re most effective at accomplishing this goal when they rank well in search results. In order for your content to show up in search results, though, it needs to be indexed. This means it’s in your best interest to do everything you can to get new pages indexed as quickly as possible.

If your content isn’t indexed, your potential customers will have no way of finding it – and it won’t help your business.

That’s why in this post, I’ll explain how to get your new pages indexed quickly so that they can help you achieve your goals.

Important terms to understand

Before I get into the factors that affect your content’s ability to be indexed quickly, there are a few key terms you should know.

Bots

Bots are web crawling programs that discover and crawl pages online. They gather online documents and content, then determine how to organize them in search engine databases. Bots have to crawl your site’s content in order for it to be indexed, or appear in Google (and other search engine) results.

Bots are also known as “web crawlers” or “spiders.”

Crawling

“Crawling” refers to the process of bots going out into the virtual online world and finding new information. Bots find new information on the Internet the same way we do — by following links from one page to the next. Then, they send new information back to search engines like Google to be indexed.
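To make the link-following idea concrete, here is a minimal sketch of a crawl over a tiny, made-up site. The `SITE` dictionary, its paths, and the `crawl` function are all assumptions for illustration; a real bot fetches pages over HTTP and is far more sophisticated.

```python
from collections import deque
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


# Hypothetical site: maps each path to its HTML.
SITE = {
    "/": '<a href="/services">Services</a> <a href="/blog">Blog</a>',
    "/services": '<a href="/">Home</a>',
    "/blog": '<a href="/blog/new-post">New post</a>',
    "/blog/new-post": "<p>No links here.</p>",
    "/orphan": "<p>Nothing links to this page.</p>",
}


def crawl(start):
    """Breadth-first crawl: visit a page, then queue every link not seen before."""
    seen = {start}
    queue = deque([start])
    order = []
    while queue:
        path = queue.popleft()
        order.append(path)
        parser = LinkExtractor()
        parser.feed(SITE.get(path, ""))
        for link in parser.links:
            if link in SITE and link not in seen:
                seen.add(link)
                queue.append(link)
    return order


discovered = crawl("/")
print(discovered)
```

In this sketch, `/orphan` never shows up in `discovered` because no other page links to it, which is why links matter so much for getting new pages found.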

Indexing

After bots bring back new online information, search engines process and check each page.

The main content isn’t the only thing they check, though. When they process pages, they also check for title tags, header tags, and other elements that indicate their main topic. Using this information, search engines can begin to accurately display new pages in their search results.
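As a rough illustration of what "checking for title tags and header tags" can look like, the sketch below pulls those elements out of a sample page using Python's standard `html.parser` module. The `TopicSignals` class and the sample `PAGE` are hypothetical; search engines don't publish their actual processing, so treat this only as a way to preview which signals your own page exposes.

```python
from html.parser import HTMLParser


class TopicSignals(HTMLParser):
    """Records the text inside <title> and <h1>-<h3> tags."""

    TRACKED = {"title", "h1", "h2", "h3"}

    def __init__(self):
        super().__init__()
        self.current = None  # tracked tag we are currently inside, if any
        self.signals = {}

    def handle_starttag(self, tag, attrs):
        if tag in self.TRACKED:
            self.current = tag

    def handle_endtag(self, tag):
        if tag == self.current:
            self.current = None

    def handle_data(self, data):
        text = data.strip()
        if self.current and text:
            self.signals.setdefault(self.current, []).append(text)


# Hypothetical page used only for this example.
PAGE = """
<html>
  <head><title>Emergency Plumbing Services</title></head>
  <body>
    <h1>24-Hour Emergency Plumbing</h1>
    <p>We fix burst pipes fast.</p>
    <h2>Service areas</h2>
  </body>
</html>
"""

parser = TopicSignals()
parser.feed(PAGE)
signals = parser.signals
print(signals)
```

If the title and headers printed here don't describe the page's main topic, that's a sign they're worth rewriting before the page is crawled.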

It’s important to note, though, that being indexed doesn’t determine where in search results a page appears. Ranking position depends on how relevant and authoritative search engines judge the page to be, which is what SEO works to improve.

Why does your site need to be indexed?

Getting your pages indexed is absolutely necessary for building your online presence.

If your site pages aren’t indexed, they won’t show up in Google search results. And considering that Google users perform over 3.5 billion searches per day, that’s a lot of missed opportunity to bring traffic to your site.

The other reasons that your site should be indexed follow in a domino effect. If your site doesn’t show up in search results, it will be difficult for users to find it. In turn, it will be hard for you to gain business, regardless of how great your content, products, or services are.

That makes learning how to submit URLs to Google critical. If you’re investing time and resources into creating a great site, you should ensure that it’s driving the results you’re looking for as a business.

For these many reasons, it’s crucial that your site is indexed.

How can I make sure my new pages are indexed quickly?

Though the time it takes to index your new pages varies, there are a few ways you can make sure that your site is regularly crawled and your new pages show up in SERPs as quickly as possible.

Create a sitemap

Creating a sitemap on your website is the first step in ensuring that it’s indexed quickly. Sitemaps serve as maps for bots, and help them locate new pages on your site. Not only does a sitemap give bots an outline of your site, but it helps them understand important information like how big your site is, where you’ve updated or added information, and where the most important information is stored on your website.

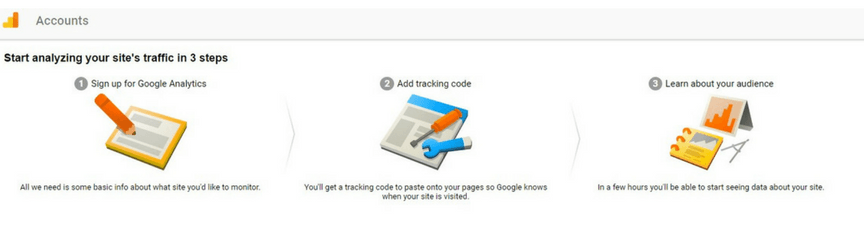
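If your CMS doesn't generate a sitemap for you, a basic XML sitemap is simple to build. Here is a minimal sketch using Python's standard library and the element names from the sitemaps.org protocol (`urlset`, `url`, `loc`, `lastmod`). The `example.com` URLs, the dates, and the `build_sitemap` helper are placeholders I made up for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

# Hypothetical pages: (URL, date last modified).
PAGES = [
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/services/new-service", "2024-02-01"),
]


def build_sitemap(pages):
    """Return sitemap XML listing each page with its last-modified date."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


sitemap_xml = build_sitemap(PAGES)
print(sitemap_xml)
```

The output is commonly saved as `sitemap.xml` at the root of the site. The `lastmod` values are what tell bots where you've updated or added information.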

Submit your website to Google

Google Search Console (formerly Google Webmaster Tools) is the first place you should submit your website, but you’ll have to verify that you own the site first.

This makes it even easier for Google to find your website and crawl it since you are basically handing them your URL. You can also submit your sitemap to other search engines like Bing and Yahoo.

Link, link, link

Links are essential in helping bots crawl and index your site. Bots crawl your website by following links, and one way to make sure your site is indexed quickly is to build out a strong internal linking structure.

You should create links from your older pages to your new pages whenever you add them to your website. Your older pages are likely already indexed, so bots that revisit them will follow those links to the new pages; adding more links that point to the older pages themselves is less important. For example, if you add a new page about a specific service, it’s helpful to add a link to that page from an older, more established page about a related service on your website.

When you link to these new pages, you make them easier for bots to find and crawl. Before long, they’ll be indexed and ready to display in search engine results.
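One quick way to check your internal linking is to look for pages that nothing else links to. The sketch below assumes you already have a map of which pages link to which (for example, exported from a site audit tool); the `LINKS` data and the `pages_without_inbound_links` function are hypothetical.

```python
# Hypothetical internal link map: each page -> the pages it links to.
LINKS = {
    "/": ["/services/seo", "/blog"],
    "/services/seo": ["/"],
    "/blog": ["/", "/services/seo"],
    "/services/ppc": ["/"],  # new page: links out, but nothing links in
}


def pages_without_inbound_links(links, home="/"):
    """Return pages that no other page links to (the home page is exempt)."""
    linked_to = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself doesn't count
    }
    return sorted(page for page in links if page not in linked_to and page != home)


orphans = pages_without_inbound_links(LINKS)
print(orphans)

# Link to the new page from an older, related service page, then re-check.
LINKS["/services/seo"].append("/services/ppc")
orphans_after = pages_without_inbound_links(LINKS)
print(orphans_after)
```

Here the new `/services/ppc` page is flagged until the related, older `/services/seo` page links to it.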

Create and maintain a blog

Maintaining a regular blog is a great way to make sure your site is crawled and your new pages are indexed frequently. Regularly adding new content also helps to improve your site’s SEO.

Not only do regularly published blog posts attract bots to crawl your website, but your improved SEO also helps your website rank highly in SERPs.

Use robots.txt

Robots.txt is a text file that lives in the root directory of your domain, and it gives directions to bots on what they can and can’t crawl. A robots.txt file with an empty Disallow rule (or no robots.txt file at all) tells bots that they can crawl every page on your website. This is typically what you want.

However, sometimes you may not want bots to crawl all of your pages because you’re aware that you have duplicate content on certain pages. For example, if you’re doing an A/B test on one of your pages, you wouldn’t want all of the variations indexed, because they could be flagged for duplicate content. Signaling that you don’t want bots to crawl these pages ensures that you avoid any issues, and that bots focus only on the new, unique pages you want them to index.
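Before you publish a robots.txt change, it's worth confirming that it blocks only what you intend. Here is a minimal sketch using Python's built-in `urllib.robotparser`; the rules, the `/landing/variant-b/` path, and the `example.com` URLs are made-up examples, not a recommended configuration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: allow everything except one A/B test variation.
ROBOTS_TXT = """\
User-agent: *
Disallow: /landing/variant-b/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# The new page should be crawlable; the test variation should not.
allowed = parser.can_fetch("Googlebot", "https://www.example.com/services/new-service")
blocked = parser.can_fetch("Googlebot", "https://www.example.com/landing/variant-b/")
print(allowed, blocked)
```

If a new page you want indexed comes back as not fetchable, the robots.txt file is the first thing to fix.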

Accumulate inbound links

Just like it’s important to link to pages within your site, it’s also extremely helpful in the indexing process to have links from other websites.

When bots crawl other sites with links to your pages, they can follow those links and discover your pages as well. Though it’s not always easy to gain inbound links, they can increase the speed at which your site is indexed. This means it can be beneficial to reach out to editors, journalists, and bloggers when you publish new content that may interest their readers.

Install Google Analytics

Google Analytics is a great platform to track your website’s performance and gain analytical data.

However, some marketers also believe that it tips off Google that a new site is coming and will need indexing. This isn’t a guaranteed tactic, but it has the potential to help with the indexing process. Plus, there’s no good reason not to have Google Analytics installed on your site, considering that it’s one of the best free analytics platforms available.

Share your content on social media

Social media doesn’t play a direct role in your site’s pages being indexed, but can help you gain online visibility. The more people see your content, the more likely it is that it will gain traction online and earn links from other sites.

And as I mentioned above, these links can help bots locate your new pages.

Do you have any strategies for getting your new pages indexed?

Have you found a tactic or strategy that helps your content get indexed at lightning speed? We’d love to hear about it in the comments below!
